What Is Latency in Networking and How to Reduce It?

What is latency?

Latency is the time it takes for data to travel from one point in a system to another. Suppose Server A in London sends a data packet to Server B in California. Server A sends the packet at 04:00:00.000 GMT, and Server B receives it at 04:00:00.150 GMT. The difference between these two times is the latency on this path: 0.150 seconds, or 150 milliseconds.

Latency is most often measured between a client device and the server that answers its requests. This metric helps developers estimate how quickly a web application will load for users. Even though signals on the Internet travel at close to the speed of light, latency can never be eliminated entirely because of distance and the delays introduced by Internet infrastructure. It can and should, however, be kept to a minimum. High latency degrades website performance, hurts SEO, and may cause users to abandon the site or service entirely.
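The London-to-California example comes down to subtracting two timestamps. Here is a minimal Python sketch of that arithmetic. The calendar date is an arbitrary assumption (the example only gives times of day), and the function name `one_way_latency_ms` is made up for illustration. In practice this calculation is only meaningful if the clocks on both servers are closely synchronized.

```python
from datetime import datetime, timedelta, timezone


def one_way_latency_ms(sent_at: datetime, received_at: datetime) -> float:
    """Return the time between send and receive in milliseconds."""
    return (received_at - sent_at) / timedelta(milliseconds=1)


# The date below is a placeholder; only the times of day come from the example.
sent = datetime(2024, 1, 1, 4, 0, 0, 0, tzinfo=timezone.utc)
received = datetime(2024, 1, 1, 4, 0, 0, 150_000, tzinfo=timezone.utc)  # +150,000 microseconds

latency_ms = one_way_latency_ms(sent, received)
print(f"Latency: {latency_ms:.0f} ms")
```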

What causes latency?

Distance, specifically the distance between the client devices making requests and the servers responding to them, is one of the primary causes of network latency. If a website is hosted in a data center in Columbus, Ohio, users in Cincinnati, approximately 100 miles away, will likely receive responses within 5 to 10 milliseconds. Requests from users in Los Angeles, approximately 2,200 miles away, will take longer, closer to 40 to 50 milliseconds.

A few milliseconds may not seem like much, but consider all the back-and-forth communication required to establish a connection between the client and the server, the total size and load time of the page, and any problems with the network equipment the data passes through along the way. Round-trip time (RTT) is the time it takes for a response to reach the client's device after the client sends a request. Because data must travel in both directions, RTT is roughly twice the one-way latency.
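To see how much of the delay comes from distance alone, the sketch below estimates the propagation delay for the two distances mentioned above. It assumes signals travel through optical fiber at about 200,000 km/s (roughly two thirds of the speed of light in a vacuum) and that the cable follows a straight line. Both are simplifications, and the function names are illustrative. The result is a lower bound: real-world figures are higher because of indirect routes, router processing, and queuing.

```python
KM_PER_MILE = 1.609344
# Assumption: approximate signal speed in optical fiber, in km per second.
FIBER_SPEED_KM_PER_S = 200_000.0


def one_way_delay_ms(distance_miles: float) -> float:
    """Estimate one-way propagation delay over a straight fiber path."""
    distance_km = distance_miles * KM_PER_MILE
    return distance_km / FIBER_SPEED_KM_PER_S * 1000.0


def rtt_ms(distance_miles: float) -> float:
    """Round-trip time is twice the one-way delay in this simple model."""
    return 2.0 * one_way_delay_ms(distance_miles)


for label, miles in (("Cincinnati", 100), ("Los Angeles", 2200)):
    print(f"{label}: one-way {one_way_delay_ms(miles):.2f} ms, RTT {rtt_ms(miles):.2f} ms")
```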

Data traveling across the Internet usually has to pass through multiple networks. The more networks an HTTP response must traverse, the more opportunities there are for delay. For example, data packets pass through Internet Exchange Points (IXPs) as they move between networks. Routers must process and forward the packets, and at times they may need to split them into smaller packets, all of which adds a few milliseconds to the RTT.

Latency vs. bandwidth

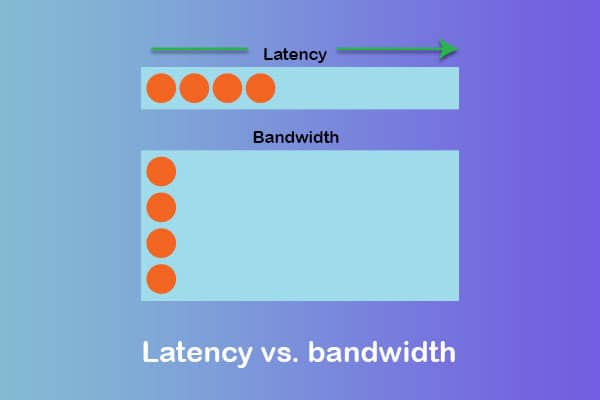

Latency and throughput are both commonly used to measure network performance and improve load times. Latency is the time it takes to complete an action, while throughput is the number of actions that can be completed in a given time. In other words, latency is how long data takes to be transferred, while throughput is how much data can be transferred in a given period.

Bandwidth is another term that often comes up in discussions of latency. Bandwidth is the maximum capacity of a network or Internet connection. When less bandwidth is available, congestion and queuing tend to increase the delay that users experience.

To understand the relationship between bandwidth and latency, picture bandwidth as a pipe and throughput as the amount of water the pipe carries in a given amount of time. Latency is the time it takes the water to reach its destination. When the pipe is too narrow for the volume of water being pushed through it, the water backs up and takes longer to arrive; a wider pipe lets the same volume arrive sooner. In this sense, bandwidth and latency are related: limited bandwidth can make delays worse.
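One simplified way to put numbers on this relationship is to treat the total transfer time as the latency plus the time needed to push the data through the link. The sketch below uses that model. It ignores protocol overhead, congestion, and TCP slow start, and the function name and example values are illustrative assumptions rather than measurements.

```python
def transfer_time_s(size_bytes: float, bandwidth_bps: float, latency_s: float) -> float:
    """Simplified model: total time = latency + payload bits / bandwidth."""
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth_bps must be positive")
    return latency_s + (size_bytes * 8) / bandwidth_bps


ONE_MEGABYTE = 1_000_000  # bytes

# Same 50 ms latency, two different link capacities (hypothetical values).
wide_pipe = transfer_time_s(ONE_MEGABYTE, 100_000_000, 0.050)   # 100 Mbps
narrow_pipe = transfer_time_s(ONE_MEGABYTE, 10_000_000, 0.050)  # 10 Mbps

print(f"100 Mbps link: {wide_pipe:.2f} s")
print(f" 10 Mbps link: {narrow_pipe:.2f} s")
```

In this model, a small request is dominated by latency, while a large download is dominated by bandwidth, which is why both figures matter for page load times.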

How to measure latency?

How latency is tested depends on the application. In some cases, measuring latency requires specialized equipment or knowledge of particular commands and programs; in others, it can be measured with a stopwatch. Ping, Traceroute, and My Traceroute (MTR) are among the tools available to network administrators.

The ping command is used to check whether a host the user is trying to reach is up and running. To measure latency, a network administrator sends an Internet Control Message Protocol (ICMP) echo request to a specific network interface and waits for the reply.

The traceroute command can also be used to collect latency data. Traceroute shows the path packets take across an IP network and records the delay at each hop along the way. MTR combines elements of both Traceroute and Ping to monitor both the delay between devices on the path and the overall transit time. To measure mechanical latency, high-speed cameras can record the small differences in response time between an input and the resulting mechanical action.
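Sending ICMP echo requests from a script usually requires elevated privileges, so the sketch below swaps in a different technique: it times how long a TCP connection takes to open, which roughly corresponds to one round trip. This is an approximation and not a replacement for ping. When a hostname is passed instead of an IP address, the measurement also includes DNS resolution. The function name is made up for illustration.

```python
import socket
import time


def tcp_connect_latency_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Time a TCP connection setup to host:port and return it in milliseconds.

    Raises OSError if the connection fails or times out.
    """
    start = time.perf_counter()
    # create_connection resolves the host and performs the TCP handshake.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0
```

For example, `tcp_connect_latency_ms("example.com", 443)` would return the connection time to that host in milliseconds. Taking several samples and looking at the median gives a steadier figure than a single measurement.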

What is good latency?

Latency of 150 ms or lower is generally considered good.

How to improve latency?

As described below, various strategies can be used to minimize latency. Reducing server latency speeds up the loading of your web resources, resulting in faster overall page loads for your users.

– Use the HTTP/2 protocol

One effective way to reduce latency is to use the widely adopted HTTP/2 protocol. HTTP/2 reduces latency by cutting the number of round trips between sender and receiver and by allowing parallelized transfers. KeyCDN customers get HTTP/2 support across all of its edge servers.

– Reduce the total number of HTTP requests

You can reduce the number of HTTP requests for images and other external resources, such as CSS or JS files. If you load resources from a server other than your own, you are making an external HTTP request, which, depending on the quality and speed of the third-party server, can significantly increase website latency.
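As a rough illustration of why the request count matters, the sketch below models a page load as "waves" of requests, where each wave costs one round trip. It assumes the browser keeps at most six requests in flight at once, a limit browsers commonly apply per host over HTTP/1.1, and it ignores transfer time and connection setup. The function name and the numbers are illustrative.

```python
import math


def estimated_fetch_time_ms(num_requests: int, rtt_ms: float, max_parallel: int = 6) -> float:
    """Estimate fetch time as (number of request waves) x (round-trip time).

    max_parallel is an assumed cap on simultaneous requests.
    """
    if num_requests <= 0:
        return 0.0
    waves = math.ceil(num_requests / max_parallel)
    return waves * rtt_ms


# Hypothetical page: compare request counts at a 50 ms round-trip time.
for count in (60, 30, 12):
    print(f"{count} requests: ~{estimated_fetch_time_ms(count, 50):.0f} ms")
```

Even in this simplified model, cutting the number of requests reduces the number of round trips the page has to wait for.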

– Start using a CDN

As discussed earlier, the distance between the clients making a request and the servers responding to it is critical. By caching content in multiple locations around the world, a CDN (content delivery network) helps serve resources closer to users. Once those resources are cached, a user's request only needs to travel to the nearest Point of Presence (PoP) to retrieve the data, rather than going back to the origin server every time.
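The idea of a "nearest Point of Presence" can be sketched by picking the closest location by great-circle distance. The PoP list below is hypothetical, and the function names are made up for illustration. Real CDNs typically route users based on network conditions and routing rather than pure geographic distance, so treat this as an illustration of the concept only.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical PoP locations as (latitude, longitude) in degrees.
POPS = {
    "London": (51.5074, -0.1278),
    "New York": (40.7128, -74.0060),
    "Singapore": (1.3521, 103.8198),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_pop(user_lat: float, user_lon: float, pops: dict = POPS) -> str:
    """Return the name of the PoP with the shortest distance to the user."""
    return min(pops, key=lambda name: haversine_km(user_lat, user_lon, *pops[name]))


# A user in Paris would be served from the London PoP in this toy setup.
print(nearest_pop(48.8566, 2.3522))
```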

– Use prefetching techniques

Prefetching web resources does not necessarily reduce latency itself. It does, however, improve the perceived performance of your website. With prefetching in place, latency-intensive operations take place in the background while the user is browsing a given page. As a result, when the visitor clicks through to a subsequent page, tasks such as DNS lookups have already been completed, so the page loads faster.
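In HTML, prefetching is usually requested with resource hints such as `<link rel="dns-prefetch">` or `<link rel="preconnect">` in the page's head. The sketch below is a small Python helper that generates those tags for a list of hosts, for example inside a server-side template. The helper name and the hostnames are hypothetical.

```python
from html import escape


def resource_hint_tags(hosts, rel="dns-prefetch"):
    """Build one <link> resource-hint tag per host for the page's <head>."""
    return [f'<link rel="{escape(rel)}" href="//{escape(host)}">' for host in hosts]


# Placeholder hostnames; replace with the third-party hosts your pages use.
tags = resource_hint_tags(["cdn.example.com", "fonts.example.com"])
print("\n".join(tags))
```

Passing `rel="preconnect"` instead asks the browser to also open the connection in advance, not just resolve the hostname.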

– Enable browser caching

Browser caching is another form of caching that can help reduce latency. Browsers cache certain website content locally, which reduces both latency and the number of requests sent to the server. Understanding how website caching works will help you minimize latency and deliver a better experience to your website visitors.

Conclusion

Now you know what latency is all about. As you have seen, latency can have a significant negative impact on a website's overall performance. With that in mind, you should aim to minimize latency as much as possible by following the tips shared above. Reducing latency on your website can noticeably improve the experience for your visitors.